Instead of an online dashboard or physical indicator, this post looks at a very different but interesting way of communicating the moisture level to the user: by sound! We cover the components built to make it work and how everything fits together!

Moisture to Sound Mapping

This was a simple attempt at making something that could run in the background without being intrusive, while still conveying the information (soil humidity) in a meaningful way. So I looked at two basic musical parameters: tempo and key. A faster tempo can suggest more activity or desperation, while major and minor keys can imply happy and sad on a basic level. An intuitive mapping is therefore to increase the tempo and switch to a sad (minor) key as the plant dries out, so the music becomes sadder and more desperate. A more complex series of states is easy to imagine, but for this example we keep it relatively simple.

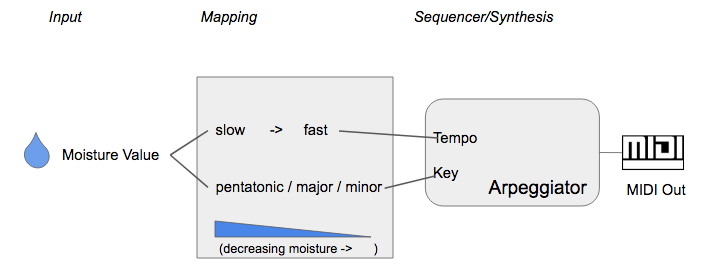

The following diagram shows the overall mapping and how we use the single moisture value to control parameters in a sequencer attached to a sound generator:

Note that for variety we also have a pentatonic scale for the high levels of moisture, switching to major and then minor as the plant dries out. The first part is potentially up for discussion… 😉
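
As a rough illustration of the key selection, here is a minimal Python sketch that turns a single moisture reading into one of the three key names. It assumes the reading has already been normalised to 0.0 (dry) to 1.0 (wet), and the band boundaries `PENTA_MIN` and `MAJOR_MIN` are hypothetical placeholders, not the values used in the actual flow. The returned strings match the `key_name` values the sequencer accepts.

```python
# Hypothetical band boundaries: the post does not give the real thresholds,
# so these are placeholders for illustration only.
PENTA_MIN = 0.6  # at or above this: well watered -> pentatonic
MAJOR_MIN = 0.3  # from MAJOR_MIN up to PENTA_MIN: drying out -> major


def moisture_to_key(moisture: float) -> str:
    """Map normalised moisture (0.0 = dry, 1.0 = wet) to a sequencer key name."""
    m = min(max(moisture, 0.0), 1.0)  # clamp out-of-range sensor readings
    if m >= PENTA_MIN:
        return "penta"
    if m >= MAJOR_MIN:
        return "major"
    return "minor"
```

For example, `moisture_to_key(0.45)` returns `"major"`, and anything below 0.3 falls through to `"minor"`.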

Sound Generation

The sound generator itself is a simple algorithmic arpeggiator (based on the MIDI output example code in the mido library) that emits MIDI messages. It is essentially a random note generator that takes the following parameters:

- Key: major, minor, or pentatonic (as strings). This selects the set of notes to draw from.

- Tempo: how quickly notes are generated.

- Direction: up, down, or random.
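
To make the three parameters concrete, the sketch below shows one way such an arpeggiator could pick notes. It is not the actual sequencer code: the scale intervals, the root note (MIDI 60, middle C), the two-octave range and the helper names `scale_notes`, `arpeggiate` and `play` are all assumptions. Tempo is treated as a note duration in seconds, and `play` takes a `send` callback so it can be wired to a MIDI port or run without any hardware.

```python
import itertools
import random
import time
from typing import Callable, Iterator, List, Optional

# Semitone offsets from the root for each key name. These scale
# definitions are assumptions: the post only names the three keys.
SCALES = {
    "major": [0, 2, 4, 5, 7, 9, 11],
    "minor": [0, 2, 3, 5, 7, 8, 10],  # natural minor
    "penta": [0, 2, 4, 7, 9],         # major pentatonic
}


def scale_notes(key: str, root: int = 60, octaves: int = 2) -> List[int]:
    """MIDI note numbers of `key`, ascending, over `octaves` octaves from `root`."""
    return [root + 12 * octave + step
            for octave in range(octaves)
            for step in SCALES[key]]


def arpeggiate(key: str, direction: str = "up", root: int = 60,
               rng: Optional[random.Random] = None) -> Iterator[int]:
    """Yield an endless stream of notes from `key` in the given direction."""
    notes = scale_notes(key, root)
    if direction == "up":
        yield from itertools.cycle(notes)          # low to high, then wrap
    elif direction == "down":
        yield from itertools.cycle(notes[::-1])    # high to low, then wrap
    elif direction == "random":
        if rng is None:
            rng = random.Random()
        while True:
            yield rng.choice(notes)                # any note from the scale
    else:
        raise ValueError(f"unknown direction: {direction!r}")


def play(notes: Iterator[int], duration: float, count: int,
         send: Callable[[int], None],
         sleep: Callable[[float], None] = time.sleep) -> None:
    """Send `count` notes, waiting `duration` seconds after each one."""
    for note in itertools.islice(notes, count):
        send(note)
        sleep(duration)
```

For example, `play(arpeggiate("minor", "random"), 0.25, 16, print)` prints sixteen random notes from the minor scale, one every quarter of a second. Keeping the note source as a generator means a change of key or direction only requires swapping in a new generator.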

The code for the sequencer is available here and requires the Python libraries mido and pyOSC: mido handles the MIDI ports, while pyOSC provides the interface for receiving OSC messages. The sequencer listens for the following OSC messages:

/tempo val

/key key_name

where val is a floating-point number giving the note duration, and key_name is one of the strings “major”, “minor”, or “penta”, selecting the key. The arpeggiator also accepts a /direction message (“up”, “down”, or “random”), but we don’t use it for now.
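
The messages themselves are small enough to build by hand. The sketch below encodes and decodes them following the OSC 1.0 wire format (null-terminated ASCII strings padded to a multiple of 4 bytes, a type tag string such as `,f`, then big-endian 32-bit arguments) using only the standard library. It does not use pyOSC's API; the function names and the `handle` dispatcher, which updates a plain dict standing in for the sequencer's state, are hypothetical.

```python
import struct
from typing import Dict, List, Tuple, Union

OscArg = Union[float, int, str]


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_message(address: str, *args: OscArg) -> bytes:
    """Build an OSC message with float (f), int (i) and string (s) arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(tags) + payload


def _read_string(data: bytes, offset: int) -> Tuple[str, int]:
    """Read an OSC string at `offset`; return it and the offset past its padding."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    after = end + 1
    return text, after + (-after % 4)


def decode_message(data: bytes) -> Tuple[str, List[OscArg]]:
    """Parse an OSC message into its address and argument list."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args: List[OscArg] = []
    for tag in tags[1:]:
        if tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag: {tag!r}")
    return address, args


def handle(data: bytes, state: Dict[str, object]) -> None:
    """Hypothetical dispatcher: apply /tempo and /key messages to sequencer state."""
    address, args = decode_message(data)
    if address == "/tempo":
        state["duration"] = float(args[0])  # note duration
    elif address == "/key":
        if args[0] not in ("major", "minor", "penta"):
            raise ValueError(f"unknown key: {args[0]!r}")
        state["key"] = args[0]
    # other addresses (such as /direction) are ignored in this sketch
```

For instance, `encode_message("/tempo", 0.25)` produces 16 bytes: the padded address, the padded `,f` tag string and one 4-byte float.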

The arpeggiator outputs MIDI note messages, which can drive an external synthesizer or a soft synth. In this example we’re using a CASIO keyboard connected via a USB MIDI port, but a soft synth running on the Raspberry Pi itself can also be used.
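
At the byte level, a MIDI note message is just three bytes: a status byte (0x90 for Note On, 0x80 for Note Off, with the channel number 0 to 15 in the low four bits), then the note number and the velocity, each 0 to 127. mido builds these for you via `mido.Message`; the hypothetical helpers below only spell out the message bytes the arpeggiator emits.

```python
NOTE_ON = 0x90   # status nibble for Note On
NOTE_OFF = 0x80  # status nibble for Note Off


def _channel_message(status: int, note: int, velocity: int, channel: int) -> bytes:
    """Pack a 3-byte MIDI channel voice message after range-checking its fields."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([status | channel, note, velocity])


def note_on(note: int, velocity: int = 64, channel: int = 0) -> bytes:
    """Start sounding `note` at the given velocity."""
    return _channel_message(NOTE_ON, note, velocity, channel)


def note_off(note: int, velocity: int = 0, channel: int = 0) -> bytes:
    """Release `note`."""
    return _channel_message(NOTE_OFF, note, velocity, channel)
```

Channel numbers here are zero-based, so channel 0 is what most instruments label as MIDI channel 1.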

System Configuration

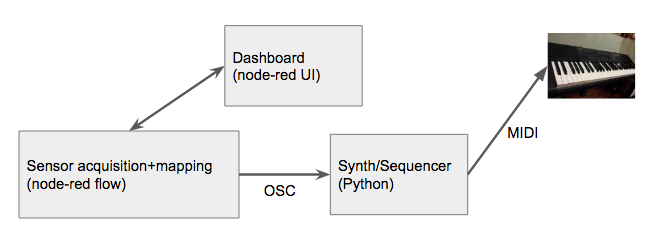

Building upon our previous node-red application, we add OSC formatting and send nodes to talk to the Python sequencer.

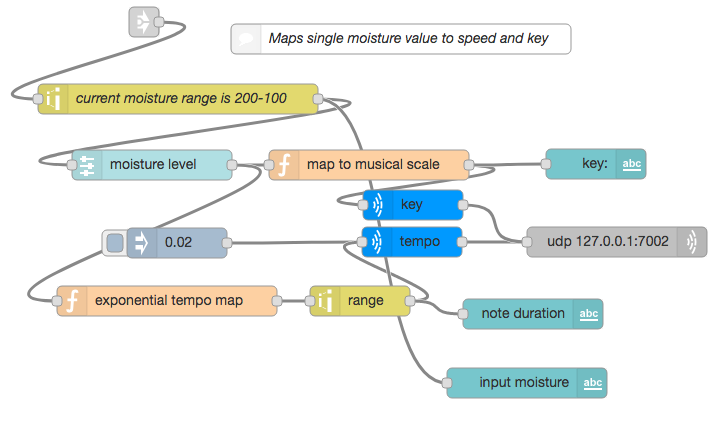

Following is the node-red flow that implements the mapping and outputs some diagnostic info to a dashboard. One of the functional blocks breaks down the moisture range to the three keys (pentatonic, major, and minor), while the tempo map performs a basic exponential scaling of the tempo so there is a sharper change in speed when you get to a critically low moisture value. There is some tuning required to figure out how best to scale the tempo…
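
One way to get that sharper change near the dry end is a normalised exponential curve, sketched here in Python rather than as a node-red function. Moisture is again assumed to be normalised to 0.0 to 1.0, and the output is a note duration in seconds suitable for the /tempo message. The constants `MIN_DURATION`, `MAX_DURATION` and `CURVE` are hypothetical tuning values, and this particular curve is an illustration, not necessarily the one used in the flow.

```python
import math

# Hypothetical tuning constants: the post notes the real scaling needed tuning.
MIN_DURATION = 0.05  # seconds per note when completely dry (fastest)
MAX_DURATION = 0.5   # seconds per note when fully wet (slowest)
CURVE = 4.0          # larger values push more of the speed-up toward the dry end


def moisture_to_duration(moisture: float,
                         min_d: float = MIN_DURATION,
                         max_d: float = MAX_DURATION,
                         k: float = CURVE) -> float:
    """Map normalised moisture (0.0 dry to 1.0 wet) to a note duration.

    Uses d(m) = min_d + (max_d - min_d) * (1 - e^(-k*m)) / (1 - e^(-k)).
    For k > 0 the curve is steepest at m = 0, so the tempo rises sharply
    as the soil approaches dry. k = 0 falls back to a straight line.
    """
    m = min(max(moisture, 0.0), 1.0)  # clamp out-of-range readings
    if k == 0:
        shape = m
    else:
        shape = (1.0 - math.exp(-k * m)) / (1.0 - math.exp(-k))
    return min_d + (max_d - min_d) * shape
```

With these placeholder values, the bottom tenth of the moisture range covers roughly a third of the duration range, while the top tenth covers under 1% of it; raising `CURVE` concentrates the change even further toward the dry end.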

The source code for the flow is here (it can be imported into your own flow using copy and paste).

Further Thoughts

While relatively simple, this example shows the potential of these connected platforms for experimenting with sensor data. Although there are many separate components, the modular design makes the example easy to adapt to other applications and use cases. One hardware simplification that many may find useful is to do away with the hardware MIDI instrument and run a soft MIDI synth instead. Amsynth has been tested in this configuration and appears to run fine alongside the rest of the system. That’s the power of using well-established protocols like MIDI!

Video demo to come…